Build Ethical AI That Customers Trust: A Guide to Consent & Transparency in CX

As AI becomes a bigger part of customer experience, trust and ethics matter more than ever. This post explores how businesses can use AI responsibly to build lasting customer relationships.

74% of consumers expect businesses to be transparent when AI is used during service interactions. The message is clear: technology alone isn’t enough. Customers want clarity, control, and confidence.

We use artificial intelligence (AI) more often than we realize. From Siri to Alexa, AI has become an integral part of everyday life, and customer experience (CX) has entered a new era as a result. That also means enterprises must be transparent and accountable in their use of AI, particularly in how they engage with customers.

Now is the right time to build ethical AI: AI that prioritizes consent, transparency, and trust. Deploying AI is easy; the real challenge is making sure it works ethically. This article isn’t about what AI can do in CX, but about what it should do.

Let’s break it down.

What Is Ethical AI in Customer Experience?

AI ethics refers to a set of moral guidelines that help ensure artificial intelligence is created, used, and managed in a responsible and fair way. In CX, that means AI should not only enhance customer experiences but also protect customers’ privacy, autonomy, and dignity.

When moral guidelines and technology are balanced correctly, the result is systems that don’t just optimize metrics but also build relationships.

Why Ethical AI in CX Is Non-Negotiable

Every AI touchpoint leaves behind a record of data of some kind. When these systems run without clearly telling people what happens to that data, they can erode trust faster than any bad experience with a human agent.

Imagine asking a health chatbot a personal question about a symptom you’re experiencing. The chatbot responds, but it doesn’t tell you where your information is stored, who might see it, or why it asked for your ZIP code. Later, you start seeing ads about that same symptom. No one warned you this would happen. Even if the tips were helpful, your trust in the app, and maybe in all health technology, starts to fade.

Compare this with a human doctor: even if they were busy or forgot to return your call, you’d probably still trust them next time. With AI, because it’s less clear how decisions are made, mistakes can feel colder and harder to forgive.

63% of customers say they are more open to sharing their data with AI in exchange for a product or service they truly value. But “value” doesn’t just mean faster; it means fair, safe, and transparent.

Therefore, using AI to improve customer experience without considering ethics is like giving a stranger access to your private diary, hoping they'll only read the parts that help them assist you. That's not how trust is built.

The 3 Pillars of Ethical AI in CX

The main building blocks of ethical AI are consent, transparency, and trust. Together, these principles guide the creation and use of AI so that it respects people’s privacy and stays fair, open, and accountable. Let’s take them one by one:

1. Consent: Give Customers the Power to Choose

Informed consent is more than just a simple message like a cookie notice. It means giving people real choice and control over how their personal information is gathered and used.

- Explain what data is used and why in plain language.

- Offer clear opt-in/out options for AI-driven services.
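To make these two points concrete, here is a minimal Python sketch of purpose-based consent. The purpose names, the `ConsentRecord` class, and `recommend_products` are hypothetical and not taken from any particular platform. The sketch assumes that every AI-driven purpose starts opted out and that consent is checked before any processing happens.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical purposes, each with the plain-language explanation shown to customers.
PURPOSES = {
    "ai_chat": "An AI assistant reads your messages to answer support questions.",
    "ai_recommendations": "An AI model uses your order history to suggest products.",
}


@dataclass
class ConsentRecord:
    customer_id: str
    # Every purpose starts opted out: no consent until the customer says yes.
    choices: dict[str, bool] = field(default_factory=lambda: {p: False for p in PURPOSES})
    history: list[tuple[datetime, str, bool]] = field(default_factory=list)

    def set_choice(self, purpose: str, granted: bool) -> None:
        if purpose not in PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.choices[purpose] = granted
        # Timestamped trail so each choice can be shown and honored later.
        self.history.append((datetime.now(timezone.utc), purpose, granted))

    def allows(self, purpose: str) -> bool:
        return self.choices.get(purpose, False)


def recommend_products(record: ConsentRecord) -> list[str]:
    # Check consent first; without it, fall back to a non-personalized path.
    if not record.allows("ai_recommendations"):
        return ["bestsellers"]
    return ["personalized"]


record = ConsentRecord("c-123")
print(recommend_products(record))
record.set_choice("ai_recommendations", True)
print(recommend_products(record))
```

If the customer later calls `set_choice(..., False)`, they return to the non-personalized path. That is what makes the opt-out real rather than cosmetic.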

65% of users would stop buying from companies that don’t protect their data transparently. The same study shows that 70% of people are concerned about their data being used in automated decision-making.

2. Transparency: Make the Invisible Visible

Customers need to know when AI is being used, what it is doing, and why it makes the choices it does. To make that possible:

- Label AI-powered interactions clearly.

- Provide explanations behind AI recommendations or actions.

- Keep audit trails and use tools like Google’s What-If Tool for explainability.
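Here is a minimal sketch of the first and third points. Every AI reply carries a visible label and a short reason, and each reply is written to an audit trail. The label text, model identifier, and field names are assumptions for illustration. An in-memory list stands in for the append-only log store a real deployment would use.

```python
import json
from datetime import datetime, timezone

AI_LABEL = "[AI assistant]"       # hypothetical disclosure wording
MODEL_VERSION = "support-bot-v1"  # hypothetical model identifier


def respond(conversation_id: str, answer: str, reason: str,
            inputs_used: list[str], audit_log: list[str]) -> str:
    """Return a labeled reply and record how it was produced."""
    # Label the interaction and explain the recommendation in plain language.
    reply = f"{AI_LABEL} {answer}\nWhy you're seeing this: {reason}"
    # One JSON line per reply; a real system would write to append-only storage.
    audit_log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "conversation_id": conversation_id,
        "model_version": MODEL_VERSION,
        "inputs_used": inputs_used,
        "reason": reason,
        "reply": reply,
    }))
    return reply


log: list[str] = []
print(respond("conv-42", "The Team plan includes shared inboxes.",
              "You asked which plan supports multiple agents.",
              ["current_message"], log))
```

Recording which inputs influenced each answer is what lets a team later explain, or dispute, a specific recommendation.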

About 90% of company leaders believe customers stop trusting brands that aren’t honest and open. AI should act as a co-pilot: transparent, supportive, and accountable. Its aim is to help users make better decisions, not to make decisions for them in the dark.

Just like a trusted co-pilot in the cockpit, AI should offer guidance and insight while keeping the human in command, with full visibility into how and why each course is charted.

3. Trust: Design Systems That Do No Harm

Trust isn’t built in a single moment; it’s earned gradually, through consistent actions that demonstrate fairness, transparency, and respect for users. Every ethical choice adds another layer of trust between users and the AI system, from how data is collected to how algorithms are trained and how recommendations are explained.

Bias, discrimination, and data misuse do the opposite: they are trust-killers. To guard against them:

- Regularly audit algorithms for bias.

- Create escalation paths for complex or emotionally charged queries.

- Prioritize empathetic AI systems that understand and adapt to customer sentiment.
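A common way to start a bias audit is to compare outcome rates across customer groups. The sketch below flags any group whose approval rate falls below 80% of the best-treated group's rate. This heuristic is known as the four-fifths rule and comes from US employment-selection guidance; it is used here as one example metric. The group labels and data are made up, and a real audit would look at more than this single measure.

```python
from collections import defaultdict


def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g. offer approved) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_flags(records: list[tuple[str, bool]],
                           threshold: float = 0.8) -> dict[str, float]:
    """Groups whose rate is below `threshold` times the highest group's rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody received the positive outcome; nothing to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical audit sample: group A approved 8/10 times, group B 5/10 times.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(disparate_impact_flags(sample))
```

In this sample, group B's ratio is 0.5 / 0.8 = 0.625, which is below 0.8, so B is flagged for review. A flag is a prompt to investigate, not proof of discrimination.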

75% of organizations believe that not being clear about how they use AI might cause customers to stop doing business with them. Sharing information about how decisions are made, like explaining why a chatbot suggests a particular product, helps customers feel more confident and trust the company.

How We Ethically Apply AI in CX

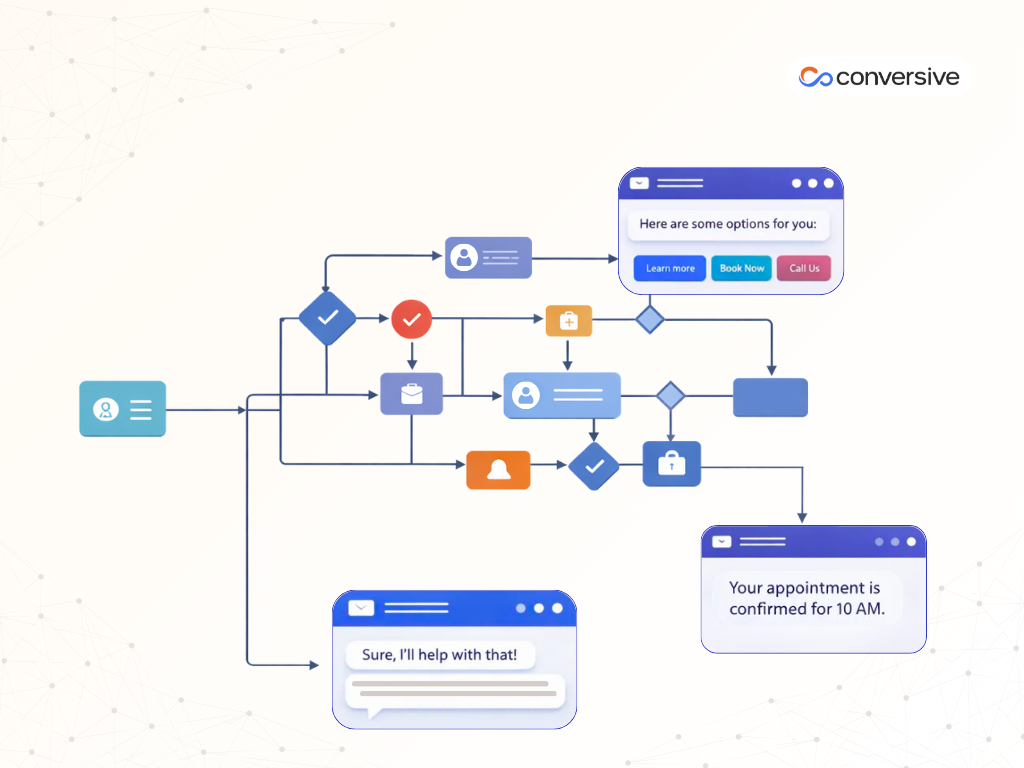

You can use AI chatbots and recommendation systems to make sure people are treated fairly throughout their entire experience with a product or service. Here’s a quick overview of common AI use cases and how we keep them ethical.

The goal is never to manipulate, but to assist and enhance.

Top 10 Ethical Principles for AI in CX

These foundational principles act like safety guidelines for creating, using, and managing AI. Following these rules helps avoid accidental problems and builds trust with people everywhere.

- Transparency: Always inform users when AI is in play. Clear labeling builds trust and sets realistic expectations.

- Consent: Give users control over their data and how it’s used. Honor their choices: opt-in should mean something.

- Fairness: Audit systems to detect and reduce bias. Ensure AI treats people equally, regardless of age, race, gender, or background.

- Privacy: Minimize data collection. Anonymize and secure personal data by default.

- Accountability: AI doesn’t absolve responsibility. Keep humans in charge of critical decisions and outcomes.

- Explainability: Make AI decisions understandable, so users can see how and why an AI reached a decision.

- Security: Protect data with strong encryption and access controls. Prevent data leaks and unauthorized access.

- Inclusivity: Design with diverse users in mind, from language to accessibility. What works for one shouldn’t exclude many.

- Sustainability: Limit unnecessary data processing and carbon impact.

- Empathy: Teach AI to recognize and respond to emotion appropriately. When AI shows it understands emotions, people will trust and connect with it more.
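The Privacy principle can be applied before data ever reaches an AI model. The sketch below keeps only an allow-list of fields. It also replaces the customer's email with a keyed hash (HMAC-SHA256), so records can still be linked without exposing the address. The field names and key handling are assumptions. This is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the AI assistant needs.
ALLOWED_FIELDS = {"order_id", "issue_category", "message"}


def pseudonymize(email: str, secret_key: bytes) -> str:
    """Stable, keyed reference for a customer that does not reveal the email."""
    normalized = email.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def prepare_for_ai(record: dict, secret_key: bytes) -> dict:
    """Drop everything not on the allow-list and add a pseudonymous reference."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["customer_ref"] = pseudonymize(record["email"], secret_key)
    return minimized


ticket = {
    "email": "Jane@Example.com",
    "phone": "+1 555 0100",
    "order_id": "A-1001",
    "issue_category": "billing",
    "message": "I was charged twice.",
}
# In practice the key would come from a secrets manager, not source code.
print(prepare_for_ai(ticket, secret_key=b"replace-with-a-managed-secret"))
```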

These aren’t just buzzwords; they’re our operating principles.

Ethical AI: What You Need to Know (and Actually Use)

If you’re using AI in your business, especially in customer experience, one question is no longer optional: are you using AI responsibly?

As a brand, you need to take one thing seriously: AI is no longer just a tech tool. It’s a decision-maker, a brand voice, and a trust-bearer. And with great power comes, well, legal obligations, ethical expectations, and a lot more scrutiny.

So let’s make it simple. There are three major frameworks you need to know if you're working with AI today:

- The OECD AI Principles (your global compass)

- The EU AI Act (your legal guardrails)

- The ISO/IEC 23894:2023 Standard (your risk management playbook)

Here’s how they work together, and why they matter for your team.

1. The OECD AI Principles: Your Ethical GPS

Think of the OECD AI Principles as the moral compass for AI. They don’t tell you what speed to drive at (that’s the EU’s job), but they do tell you which direction is right.

First introduced in 2019 and refreshed in 2024, these principles are recognized by nearly 50 governments as the foundation for trustworthy and human-centered AI.

Here’s the simple version:

- AI should benefit everyone. Think of a rising tide that lifts all boats, not just the fancy ones.

- Respect human rights and fairness. No hidden biases or black-box decisions.

- Be transparent and explainable. If the AI is making a call, users should understand how.

- Keep it safe and robust. Like airbags in a car, always ready, always tested.

- Hold someone accountable. If it goes wrong, someone needs to step up.

These principles have shaped over 1,000 AI policies worldwide. If you're building or using AI, even in something as routine as customer service automation, this is your ethical anchor.

2. The EU AI Act: The Law of the AI Land

Now, if the OECD Principles are your GPS, the EU AI Act is the actual road law.

Adopted in 2024, it’s the world’s first comprehensive legal framework for AI, and yes, it’s enforceable. That means fines, audits, and real-world consequences for getting it wrong.

So, how does it work?

The EU AI Act sorts AI systems into four risk categories:

1) Unacceptable Risk: AI practices considered so harmful that they are banned, such as social scoring systems that judge people’s social status or systems that manipulate people’s behavior.

2) High Risk: If your AI affects things like hiring, credit decisions, identity checks, or public safety, it belongs here. You’ll need to show that you’ve assessed the risks, kept records of how the system works, ensured humans are involved, and planned how to handle any problems that come up.

3) Limited Risk: These systems mostly require transparency. If your chatbot is AI-powered, for example, you’ll need to tell the user.

4) Minimal Risk: Low-stakes AI, such as tools that suggest playlists or change the tone of your emails. There are no complicated rules here, but it’s still important to watch for unintended mistakes or problems.
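A team cataloguing its AI features might start with a simple triage like the sketch below. Each use case maps to a tier, and a system takes the strictest tier of anything it does. The mapping covers only the examples named in this article and is a hypothetical simplification, not the Act's legal text. Anything unmapped is sent for manual review rather than guessed.

```python
from enum import Enum


class RiskTier(Enum):
    # Declared from strictest to least strict; triage relies on this order.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical mapping built only from the examples in this article.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "behavioral_manipulation": RiskTier.UNACCEPTABLE,
    "hiring": RiskTier.HIGH,
    "credit_decision": RiskTier.HIGH,
    "identity_check": RiskTier.HIGH,
    "public_safety": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "playlist_suggestions": RiskTier.MINIMAL,
    "email_tone_rewrite": RiskTier.MINIMAL,
}


def triage(use_cases: list[str]) -> RiskTier:
    """Return the strictest tier among a system's use cases."""
    unknown = [u for u in use_cases if u not in EXAMPLE_TIERS]
    if unknown:
        raise ValueError(f"needs manual review: {unknown}")
    order = list(RiskTier)  # definition order: strictest first
    return min((EXAMPLE_TIERS[u] for u in use_cases), key=order.index)


# A support bot that can also pre-approve credit is treated as high risk.
print(triage(["customer_chatbot", "credit_decision"]))
```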

The bans on unacceptable-risk practices took effect in February 2025, and the remaining obligations are being phased in gradually through 2027. Fines for the most serious violations can reach €35 million or 7% of a company’s total worldwide annual turnover, whichever is higher.
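The fine ceiling for the most serious violations is simple arithmetic, sketched here in whole euros. One detail of the Act is worth knowing: for SMEs and start-ups, the lower of the two figures applies instead of the higher. The function name and inputs are illustrative. Actual fines are set case by case and can be far below the ceiling.

```python
def max_fine_eur(worldwide_annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Ceiling for the most serious EU AI Act violations, in whole euros."""
    fixed_cap = 35_000_000
    turnover_cap = worldwide_annual_turnover_eur * 7 // 100  # 7% of turnover
    # Larger companies face whichever figure is higher; SMEs whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur(200_000_000))    # 7% is EUR 14M, so the EUR 35M figure applies
print(max_fine_eur(1_000_000_000))  # 7% is EUR 70M, which exceeds EUR 35M
```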

Bottom line: If you’re using AI in CX, sales, HR, or product features, you need to know where your system fits. It’s no longer a choice; it’s the law.

3. ISO/IEC 23894:2023: The Risk Management Playbook

Finally, once you know what's expected and what's legal, you’ll need a how-to guide to do it right.

That’s where ISO/IEC 23894:2023 comes in. It’s like a recipe for safe AI, built by experts, recognized globally, and increasingly expected by regulators and partners.

Here’s what it helps you do:

- Establish clear risk management principles. Think transparency, accountability, and continual improvement, much like the safety checklist before every flight.

- Create a formal risk management structure. Define roles, responsibilities, and processes across your team.

- Apply a lifecycle mindset. Risk doesn’t end at deployment. Like maintaining a car, your AI needs regular checkups.

By following this standard, you’re not just protecting your brand; you’re building systems that are smarter, safer, and more sustainable.

So What Does This All Mean for You?

If you’re building AI for customer experience, this isn’t just about checking legal boxes. It’s about building trust.

Every time your AI suggests a product, answers a support query, or flags a risky transaction, it’s shaping how customers perceive your brand. Do they feel heard? Respected? Safe?

Following ethical and legal frameworks isn’t just the right thing to do; it’s a competitive advantage.

So here’s your to-do list:

- Use the OECD Principles to guide your AI values

- Follow the EU AI Act to stay compliant and avoid penalties

- Adopt ISO/IEC 23894 to manage risks the smart way

This is how you lead in the age of AI, not just with better algorithms, but with better choices.

Ready to Build CX Your Customers Can Trust?

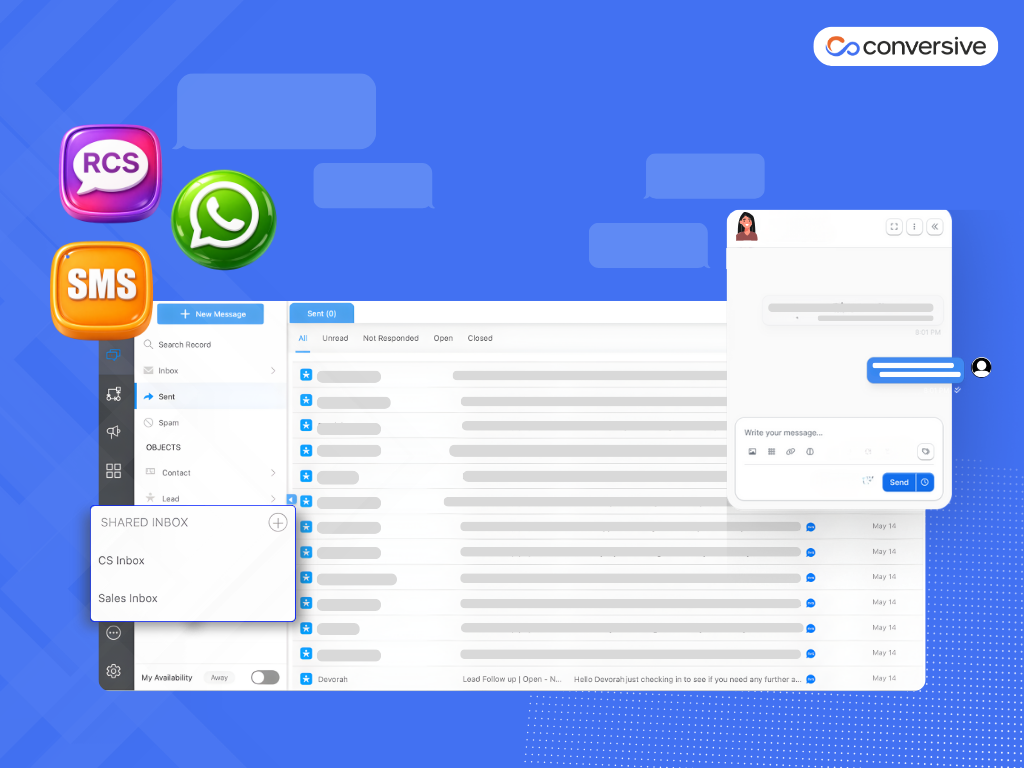

Customers today don’t just expect personalization; they expect principles. And at Conversive, we help companies deploy AI solutions that are not only powerful, but principled. From consent management to ethical chatbot design, we’ll guide you every step of the way.

When we build CX strategies with consent, transparency, and trust at the core, we’re not just delivering better service, we’re building better relationships.

Schedule your free AI ethics audit today

Let’s start a conversation →

Frequently Asked Questions

1. What is ethical AI in customer experience?

Using AI in ways that are fair, transparent, and respectful of customer rights.

2. Why is AI transparency important in CX?

It builds customer trust and reduces frustration with automated systems.

3. What are some AI ethics best practices?

Bias checks, informed consent, explainable decisions, and human fallback options.

4. Can small businesses implement ethical AI?

Yes, even simple disclosures and opt-ins make a big difference.

5. What are the risks of unethical AI in CX?

Loss of trust, legal penalties, reputational damage, and customer churn.
