How Can Businesses Build AI That Consumers Actually Trust?

With 61% of consumers hesitant to trust AI, concerns about privacy, bias, and transparency are growing. Discover why trust in AI is broken, and how ethical design and clear communication can help rebuild it.

Would you trust a faceless algorithm to decide your insurance premium, diagnose a health condition, or resolve a complaint, without ever understanding how it works?

That’s no longer a hypothetical. AI now handles tasks we once assumed only humans could do, like helping us when we contact customer service or recommending products as we shop online, and it does them automatically and quickly. Unlike humans, however, AI doesn’t naturally understand feelings (empathy) or explain why it makes certain decisions unless it’s specifically designed to do so.

AI is no longer just starting out; it is already part of many areas of our lives, including shopping, healthcare, legal services, education, finance, and travel. Yet even as AI proves useful, many people are growing more hesitant to trust it: 61% of global respondents say they are wary about trusting AI systems, and doubt is growing about whether these systems are reliable and safe. According to Pew Research, 78% of U.S. adults trust themselves to make the right decisions about their personal information, but 61% are skeptical that anything they do will make much difference. Meanwhile, 64% of consumers say that when companies provide clear information about their privacy policies, it increases their trust.

In this article, I’ll unpack what’s really driving consumer skepticism toward AI, from privacy concerns and algorithmic bias to transparency gaps and cultural nuances. You’ll discover:

- Key stats revealing how consumers really feel about AI today

- Real-world examples of trust lost, and rebuilt

- Proven frameworks and practical CX strategies to design AI systems people actually believe in

Let’s dive into the core of it all: consumer trust in AI, why it’s broken, how it’s earned, and what it means for the future of customer experience.

How Consumers Really Feel About AI

Let’s get one thing straight: consumers aren't rejecting AI as a concept. They're rejecting the way it often shows up in their lives: unexplained, impersonal, and, too often, invisible until something goes wrong.

You can feel this tension in the numbers:

- 68% of global consumers are somewhat or very concerned about online privacy. (IAPP)

- 57% believe AI poses a significant threat to their personal data. (IAPP)

- 61% say they’re hesitant to trust AI-driven systems. (Termly)

- Only 17% of consumers find AI privacy and security policies clear, and those who do are 4x more likely to trust it. (Deloitte)

Fewer than 20% of people truly understand what happens to their personal data. At a time when AI can decide whether you're approved for a loan, hired for a job, or given medical advice, not knowing how your data is used is more than a minor issue: it limits how much control you have over your own information.

Say you fill out an online loan application. A simple form asks for your income, employment status, and credit history. After you submit it, you get a decision: “Approved” or “Denied.” You never see how the bank’s AI analyzed your data to reach that result, so you're left in the dark about which factors influenced the decision.

But here’s where it gets more nuanced.

According to Axios, 72% of consumers in China say they trust AI; in the U.S., that number drops to 32%.

The gap reflects how each country’s culture and government approach technology and privacy. In China, people are used to governments and companies using data and technology to make decisions and keep things running smoothly. In the U.S., the emphasis is on individual rights and privacy, so people worry more about how their personal information is used and prefer decisions that respect personal choice.

These numbers are more than statistics; they send important emotional signals. They point to the questions that leave people worried or anxious: Who is watching me? Who gets to make decisions about me? And if something seems wrong, can I do anything about it?

It's like walking into a store where every worker already knows your last ten purchases, what websites you've looked at, how much money you have, and even what gift you considered for someone. But you have no idea how they found out this information or what they plan to do with it. That’s what using AI sometimes feels like.

Companies now face a choice: build AI they are honest and clear about, so people can trust it, or risk making customers uncomfortable and driving them away.

Trust in AI doesn’t happen by accident; it has to be earned.

The Fallout: When AI Breaks Trust

AI can help people in many ways, but when it’s used without careful oversight, it can cause real problems. Social media recommendation algorithms can amplify outrage and division, and systems that decide who gets loans or credit can treat some people unfairly. These failures make people distrust AI, and when AI systems aren’t open about how they work or accountable for their outcomes, trust breaks down even further. This section looks at how lost trust in AI affects society, and why transparency and accountability matter.

1. Social Platforms & Polarizing AI Recommendations

Some social media platforms have faced criticism for recommendation engines that prioritize engagement, which often means promoting sensational or controversial posts. This can push people's opinions to extremes and deepen political divides.

This can create “filter bubbles,” where users see only ideas they already agree with, and it can even push some users toward more radical or extreme beliefs. The result erodes trust in social media and raises concerns about how fair and transparent these recommendation systems are.

For instance, a news app that mainly shows users sensational headlines about celebrities or scandals, rather than balanced news coverage, ends up creating a bubble where users only see extreme or biased stories, making them distrust other perspectives.

2. Financial Services & Biased Credit AI

Fintech companies like Upstart use AI to analyze types of data that banks don’t normally consider. This has helped them extend loans to more young people and first-time borrowers: they approved about 27% more loan applications, and they also reduced the number of borrowers who couldn’t repay their loans by 16%. However, experts warn that these AI systems can unintentionally build in unfair or biased judgments if they aren’t carefully checked and explained.

AI decides who qualifies for loans based on patterns in past data. In 2019, the Apple Card's credit algorithm was accused of giving men much higher credit limits than women, even when they reportedly had identical financial backgrounds. The company couldn't fully explain why.

The Bounce Back: Trust Rebuilt by Design

Carefully developed AI can win back trust by solving real problems clearly and dependably. For example, AI can help doctors detect diseases sooner, keep people's financial information safe, and improve emergency response. When the people who build AI focus on accuracy, privacy, and user control, they can restore trust and make people more comfortable using the technology.

1. Healthcare

In healthcare, AI is earning more trust because it helps both doctors and patients. AI tools can analyze medical images such as scans and detect problems with up to 97% accuracy, outperforming some human experts, who are accurate about 86% of the time.

Besides being accurate, AI also works faster, cutting down the time to analyze images by up to three-quarters. This means patients get diagnoses sooner, wait less, and hospitals can give better care, especially when they are busy. The AI doesn’t replace doctors but works alongside them, making healthcare more reliable.

2. Fintech

Plaid, a fintech company, built secure APIs that let users connect their bank accounts to different apps. It made sure users knew what information was being shared and let them choose exactly which data to share. Because Plaid focused on data security and user control, many apps adopted its service, and both users and developers trusted the system because it was transparent and protected their privacy.

3. Disaster Readiness

Japan improved its earthquake detection system using deep learning. The upgrade made alerts more accurate, catching 70% more earthquakes while producing fewer false alarms. As the alerts became more dependable, people came to see the government’s use of AI as helpful and reliable for keeping them safe during disasters.

Takeaway

Trust in AI isn’t a small feature or a simple design detail. It’s built through careful planning, honest decisions, and ongoing user education about how the product works. The failures and recoveries above show that trust is earned by being open about what’s happening, treating people fairly, and keeping their needs at the center of every stage of building and improving AI.

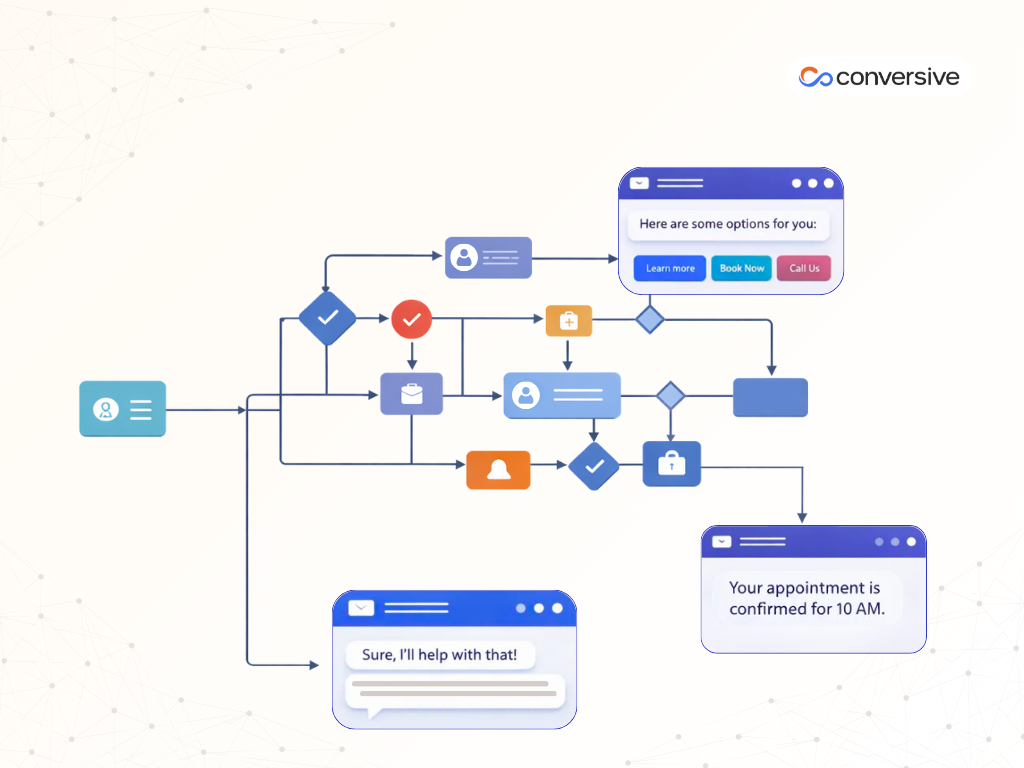

How Do You Design AI People Actually Trust?

Let’s face it: many people don’t trust what they don’t understand. If AI feels like a black box, many will opt out. Whether you're building AI into a shopping app, a loan system, or a medical tool, trust isn't a nice-to-have; it's the foundation. Here’s how to design for it:

1. Explain Things Like You're Talking to a Human

Drop the jargon. People don’t need to know what a “neural network” is, but they do deserve to know why your AI made a decision.

Use Case: A spam filter decides whether an email is spam by looking at its words, sender, and other clues, then explains, “This email was marked as spam because it contains words often used in spam messages,” instead of just saying, “We used AI to make this decision.”

Pro tip: If your mom or your intern can’t understand it, your users probably won’t either. Run your copy by both.
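To make the spam-filter example concrete, here is a minimal Python sketch of a classifier that returns a plain-language reason alongside its verdict. The `SPAM_WORDS` list, the scoring rule, and the `classify_email` helper are hypothetical; a real filter would learn its signals from data, but the principle is the same: every decision carries a sentence a person can read.

```python
from dataclasses import dataclass

# Hypothetical, hand-picked words; a real spam filter would learn its signals from data.
SPAM_WORDS = {"winner", "free", "urgent", "prize", "click"}


@dataclass
class SpamDecision:
    is_spam: bool
    reason: str  # plain-language explanation shown to the user


def classify_email(subject: str, body: str, sender_known: bool, threshold: int = 2) -> SpamDecision:
    """Score an email and explain the verdict in everyday words."""
    words = {w.strip(".,!?:;").lower() for w in f"{subject} {body}".split()}
    hits = sorted(words & SPAM_WORDS)

    # Collect human-readable reasons alongside the numeric score.
    reasons = []
    if hits:
        reasons.append("it contains words often used in spam messages (" + ", ".join(hits) + ")")
    if not sender_known:
        reasons.append("it comes from a sender you haven't emailed before")

    score = len(hits) + (0 if sender_known else 1)
    if score >= threshold:
        return SpamDecision(True, "This email was marked as spam because " + " and ".join(reasons) + ".")
    return SpamDecision(False, "This email was delivered normally: no strong spam signals were found.")


decision = classify_email("You are a WINNER!", "Claim your free prize now", sender_known=False)
print(decision.reason)
```

The explanation is assembled from the same signals that drive the score, so the message can't drift away from what the system actually did.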

2. Show People What You’re Collecting, and Why

It’s one thing to say “we collect data”, it’s another to show exactly what, when, and how. A simple dashboard or visual explainer goes a long way.

Use Case: Imagine a coffee shop that records how many customers buy coffee at the register each morning. It plots the counts on a simple chart, with days of the week along the bottom and the number of customers up the side. The chart shows exactly what data is collected, when it's collected, and how the shop uses it to spot patterns.

Pro tip: A timeline view (e.g., “we saved this purchase on June 2”) builds more trust than a vague privacy statement.
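A timeline view can be as simple as a log of what was collected, when, and for what purpose, rendered in everyday language. This sketch assumes a hypothetical `DataEvent` record and `render_timeline` helper; it illustrates the idea rather than prescribing a schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DataEvent:
    when: date
    what: str  # the data item collected, described in plain words
    why: str   # the purpose the user was told about


def render_timeline(events: list[DataEvent]) -> list[str]:
    """Return one plain-language line per event, oldest first."""
    lines = []
    for e in sorted(events, key=lambda ev: ev.when):
        lines.append(f"{e.when:%B} {e.when.day}: we saved {e.what} to {e.why}.")
    return lines


events = [
    DataEvent(date(2024, 6, 5), "your delivery address", "ship your order"),
    DataEvent(date(2024, 6, 2), "this purchase", "suggest related products"),
]
for line in render_timeline(events):
    print(line)
```

Storing the purpose next to each data item forces the team to state why something was collected at the moment it is collected.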

3. Let People Control Their Own Data Story

People should be able to pause, reset, archive, or delete their data like they clear browser history. This makes them feel in charge, not tracked.

Use Case: A photo-sharing app lets you remove any photo you've uploaded, or hide it from your profile, at any time, as easily as clearing your browsing history.

Pro tip: Add a “reset recommendations” button and see how much more confident users feel about personalization.
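Here is a minimal sketch of the pause, delete, and reset controls described above, using a hypothetical in-memory `PersonalizationStore` and a toy category-matching recommender. A production system would also need to purge the data from backups and any derived models, which this sketch does not attempt.

```python
class PersonalizationStore:
    """Hypothetical per-user store with pause, delete, and reset controls."""

    def __init__(self) -> None:
        self.history: list[str] = []  # items the user has viewed
        self.paused = False           # while True, nothing new is recorded

    def record_view(self, item: str) -> None:
        if not self.paused:
            self.history.append(item)

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def delete_item(self, item: str) -> None:
        # Remove every record of one item, like deleting a single history entry.
        self.history = [i for i in self.history if i != item]

    def reset_recommendations(self) -> None:
        # The "reset recommendations" button: forget all viewing history.
        self.history.clear()

    def recommendations(self, catalog: dict[str, str]) -> list[str]:
        # Toy recommender: suggest unseen items in categories the user has viewed.
        seen = {catalog[i] for i in self.history if i in catalog}
        return sorted(i for i, cat in catalog.items() if cat in seen and i not in self.history)


catalog = {"hiking boots": "outdoor", "tent": "outdoor", "novel": "books", "cookbook": "books"}
store = PersonalizationStore()
store.record_view("tent")
print(store.recommendations(catalog))
store.reset_recommendations()
print(store.recommendations(catalog))
```

Because recommendations are always derived from the current history, every control the user touches has an immediate, visible effect.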

4. Make It Clear They Have a Say

Give people choices about how their data is used, whether for personalization, training models, or nothing at all. One-size-fits-all opt-ins don’t cut it anymore.

Use Case: When signing up for a streaming service, you are asked if you'd like to allow your viewing habits to be used to improve recommendations, if you're okay with your data helping train new AI features, or if you'd prefer your data not to be used at all.

Pro tip: Break consent into bite-sized toggles. The more granular the control, the less creepy it feels.
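Granular consent can be modeled as a set of separately granted purposes, where an empty set means "don't use my data at all." The `Purpose` enum, `Consent` class, and `data_for` gate below are hypothetical names for illustration; the key design choice is that every purpose defaults to off, and data is released only for purposes the user switched on.

```python
from enum import Enum


class Purpose(Enum):
    # Each purpose is its own toggle, worded the way the user sees it.
    PERSONALIZATION = "Use my viewing habits to improve recommendations"
    MODEL_TRAINING = "Let my data help train new AI features"


class Consent:
    def __init__(self) -> None:
        # Opt-in by default: an empty set means "don't use my data at all".
        self.granted: set[Purpose] = set()

    def toggle(self, purpose: Purpose, on: bool) -> None:
        if on:
            self.granted.add(purpose)
        else:
            self.granted.discard(purpose)

    def allows(self, purpose: Purpose) -> bool:
        return purpose in self.granted


def data_for(purpose: Purpose, consent: Consent, viewing_history: list[str]) -> list[str]:
    """Release viewing history only for purposes the user switched on."""
    return list(viewing_history) if consent.allows(purpose) else []


consent = Consent()
consent.toggle(Purpose.PERSONALIZATION, True)  # yes to recommendations, no to training
history = ["Space Docs", "Ocean Life"]
print(data_for(Purpose.PERSONALIZATION, consent, history))
print(data_for(Purpose.MODEL_TRAINING, consent, history))
```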

5. Don’t Just Say It, Show Your Work

If your AI recommends a product, loan, or doctor’s note, explain why. A little “because you liked X” message makes the system feel more transparent and human.

Use Case: You receive a movie suggestion from a streaming service with the message, “We recommend this movie because you watched similar action films”.

Pro tip: Even a short “We thought this might help because…” tag next to a suggestion can increase engagement and trust.
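A "because you watched X" message comes almost for free if the recommender keeps track of which past item triggered each suggestion. This sketch assumes a hypothetical genre-overlap recommender, `recommend_with_reason`; real systems use richer signals, but they can attach a reason the same way.

```python
def recommend_with_reason(watched: dict[str, set[str]], catalog: dict[str, set[str]]):
    """Pick the unseen title that shares the most genres with something the user watched,
    and return it with a plain-language reason (or None if nothing matches)."""
    best = None  # (shared_count, title, matched_title, shared_genres)
    for title, genres in catalog.items():
        if title in watched:
            continue
        for seen_title, seen_genres in watched.items():
            shared = genres & seen_genres
            if shared and (best is None or len(shared) > best[0]):
                best = (len(shared), title, seen_title, sorted(shared))
    if best is None:
        return None
    _, title, seen_title, shared = best
    reason = f"We recommend {title} because you watched {seen_title} (shared genres: {', '.join(shared)})."
    return title, reason


watched = {"Heat": {"action", "crime"}}
catalog = {"Ronin": {"action", "crime"}, "Up": {"animation", "family"}}
print(recommend_with_reason(watched, catalog))
```

Returning `None` when nothing matches is deliberate: it is better to show no suggestion than one the system can't justify.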

6. Be Honest When You’re Not 100% Sure

AI isn’t magic. It gets things wrong. Show confidence levels or ranges instead of acting like your system is always right.

Use Case: A weather app says, “It will probably rain tomorrow,” and shows a 70% chance of rain, making it clear there's a 30% chance it won't.

Pro tip: Replace “yes/no” with ranges like “highly likely” or “low confidence”. People trust systems that admit uncertainty.
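One way to replace a bare yes/no with honest uncertainty is to map a model's probability to a verbal label and show the complementary chance as well. The thresholds and wording in this hypothetical `describe_forecast` helper are assumptions you would tune with user testing.

```python
def describe_forecast(p_rain: float) -> str:
    """Turn a rain probability into a hedged, human-readable message."""
    if not 0.0 <= p_rain <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    pct = round(p_rain * 100)
    # Hypothetical thresholds for the verbal label; tune them with real users.
    if p_rain >= 0.8:
        label = "highly likely"
    elif p_rain >= 0.6:
        label = "likely"
    elif p_rain > 0.4:
        label = "uncertain"
    elif p_rain > 0.2:
        label = "unlikely"
    else:
        label = "very unlikely"
    return f"Rain tomorrow is {label}: {pct}% chance of rain, {100 - pct}% chance it stays dry."


print(describe_forecast(0.7))
```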

7. Keep Humans in the Driver’s Seat

Especially in sensitive areas like health, hiring, and finance, AI should assist people, not replace them. Let a person make the final call.

Use Case: A doctor reviews an AI-generated diagnosis but makes the final decision after considering their own assessment and patient history.

Pro tip: Add an easy “escalate to a human” button. Frustrated users won’t have to fight a robot to get help.
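An "escalate to a human" path can be expressed as a small routing rule: hand off whenever the user asks, whenever the topic is sensitive, or whenever the model's confidence falls below a threshold. The `SENSITIVE_TOPICS` set, the `AiSuggestion` type, and the 0.85 cut-off below are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Hypothetical list of topics where a person must sign off on the outcome.
SENSITIVE_TOPICS = {"health", "hiring", "finance"}


@dataclass
class AiSuggestion:
    topic: str
    answer: str
    confidence: float  # the model's own estimate, from 0 to 1


def route(s: AiSuggestion, user_requested_human: bool = False, min_confidence: float = 0.85) -> tuple[str, str]:
    """Decide who handles a request; returns (handler, message shown to the user)."""
    if user_requested_human:
        return "human", "Connecting you with a person now."
    if s.topic in SENSITIVE_TOPICS:
        return "human_review", "A specialist will review this suggestion before anything is final."
    if s.confidence < min_confidence:
        return "human", "We're not confident enough to answer this automatically, so a person will help."
    return "ai", s.answer


print(route(AiSuggestion("billing", "Your invoice has been re-sent.", 0.95)))
print(route(AiSuggestion("health", "This looks like a mild sprain.", 0.99)))
```

Checking the user's request first means a frustrated customer never has to argue with the model's confidence score to reach a person.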

8. Stress-Test for Fairness

Run audits to make sure your system treats everyone fairly, across gender, race, age, and more. If something’s off, fix it fast.

Use Case: Imagine a company’s online job application process automatically shortlists candidates. A fairness audit finds that most shortlisted candidates are men, even though there are qualified women applying. The company then adjusts the process to ensure it considers women equally, leading to a more diverse set of shortlisted candidates.

Pro tip: Don’t just test your AI on averages. See how it performs at the edges: marginalized users are often the canaries in the coal mine.
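A basic fairness audit for the hiring example compares shortlisting rates across groups. This sketch applies the "four-fifths rule," a widely used screening heuristic from U.S. employment-selection guidelines that flags any group whose selection rate is below 80% of the highest group's rate. The helper names and sample data are hypothetical, and a real audit would also check statistical significance and intersectional subgroups.

```python
from collections import Counter


def selection_rates(candidates):
    """candidates: iterable of (group, was_shortlisted) pairs -> shortlist rate per group."""
    applied, shortlisted = Counter(), Counter()
    for group, selected in candidates:
        applied[group] += 1
        if selected:
            shortlisted[group] += 1
    return {g: shortlisted[g] / applied[g] for g in applied}


def flag_groups(candidates, threshold=0.8):
    """Four-fifths rule: flag groups whose rate is below 80% of the best group's rate."""
    rates = selection_rates(candidates)
    best = max(rates.values())
    if best == 0:
        return []  # nobody was shortlisted, so there is no rate to compare against
    return sorted(g for g, r in rates.items() if r / best < threshold)


applicants = [("men", True)] * 30 + [("men", False)] * 70 + [("women", True)] * 15 + [("women", False)] * 85
print(selection_rates(applicants))
print(flag_groups(applicants))
```

Here women are shortlisted at half the rate of men (15% vs. 30%), well under the 80% line, so the audit flags the process for review.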

9. Keep the Conversation Going

Trust isn’t built once; it’s maintained. Share transparency reports. Host Q&As. Give people a way to understand how your system evolves.

Use Case: A company sends out a quarterly report detailing its data privacy practices and updates, then holds a live online session where customers can ask questions about new features and how their information is protected.

Pro tip: Add a “What’s new with our AI?” section to your help center. It shows you’re not hiding behind the curtain.

10. Teach People How to Use AI, Without Preaching

Most people aren’t AI experts, and that’s okay. Give them explainer videos, short guides, or tooltips when they need them, not in a 50-page whitepaper.

Use Case: Imagine you’re setting up a new smartphone. Instead of flipping through a long manual, you get quick pop-up tips on the screen that explain how to connect Wi-Fi or take a photo when you need them.

Pro tip: Micro-learning beats manuals. Think 30-second explainers, not hour-long webinars.

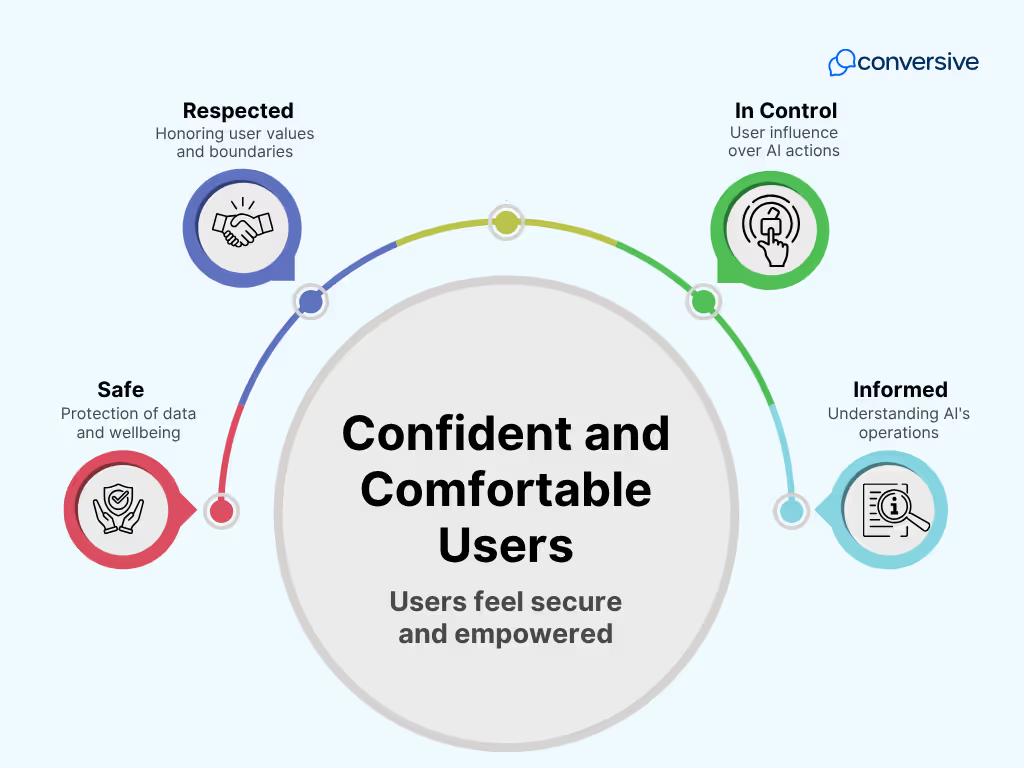

Trust-Building Pillars in AI Design

Trust isn’t built with one button; it’s woven into every interaction. When people feel informed, in control, respected, and safe, they don’t just use your AI. They believe in it.

Trustworthy AI Starts Here: Conversive Makes It Easy

If you're powering customer conversations, decision support, or internal automation, your users and teams deserve to know that your AI is transparent, ethical, and safe. That’s where Conversive comes in.

Conversive is purpose-built to help businesses deploy responsible AI, without slowing innovation. We make it easy to build, monitor, and manage AI systems that people can trust, right out of the box. Here are a few features:

1. Built-In Ethics and Transparency

Conversive gives you full visibility into data flows, model logic, and decision-making, so your AI earns user trust from day one.

2. Human-in-the-Loop by Default

Empower teams to review, validate, and intervene where needed. Conversive keeps humans in control of critical moments.

3. Compliance-First Architecture

Stay ahead of regulatory demands with enterprise-grade support for GDPR, HIPAA, and other data protection standards.

4. Bias Detection & Mitigation

Proactively uncover and fix bias in models before they go live, using built-in fairness and audit tools.

5. Trusted Conversations Powered by AI

Enable AI that’s not just smart, but respectful, adaptive, and aligned with user intent and tone.

6. Real-Time Monitoring & Alerts

Track AI performance continuously, get notified of anomalies, and intervene before issues escalate.

7. Plug-and-Play with Guardrails

Get started fast with pre-built components, without compromising on control, safety, or governance.

8. Trusted by Teams Across Industries

From finance to healthcare to CX, Conversive powers AI where trust is business-critical.

Ready to put trust at the core of your AI strategy? Book a demo today and see how Conversive makes trustworthy AI easy.

Frequently Asked Questions

1. Why do many consumers hesitate to trust AI systems?

Because AI often feels unexplained, impersonal, and invisible until something goes wrong.

2. How does lack of transparency affect consumer trust in AI?

When users don’t understand how AI makes decisions or how their data is used, trust decreases significantly.

3. What role does data privacy play in consumer trust?

Clear privacy policies and giving consumers control over their data greatly increase trust in AI systems.

4. How can AI bias impact consumer trust?

If AI treats some groups unfairly, consumers lose confidence in its reliability and fairness.

5. Why is human oversight important in building trust with AI?

Keeping humans in control of critical decisions reassures consumers that AI won’t make unchecked errors.

6. How do cultural differences influence trust in AI?

Trust levels vary by region, depending on how societies view privacy, data use, and government regulation.

7. Can admitting uncertainty improve trust in AI?

Yes, showing confidence levels or admitting when AI isn’t 100% sure helps users trust the system more.

8. What practical steps can companies take to build consumer trust in AI?

Explain decisions clearly, show what data is collected, let users control their data, and maintain ongoing transparency.

9. How does Conversive help businesses build AI that consumers trust?

By providing transparency, bias detection, human-in-the-loop control, and compliance features designed to earn user trust.
